We’ve got some great development tools in Business Central these days, but it’s still not possible to solve every problem with AL. This week I hit such a problem while developing a JavaScript Control Addin to embed a third-party web application in Business Central SaaS. The problem was OAuth 2.0 authentication, or more specifically, how to get an access token from a redirect URL.

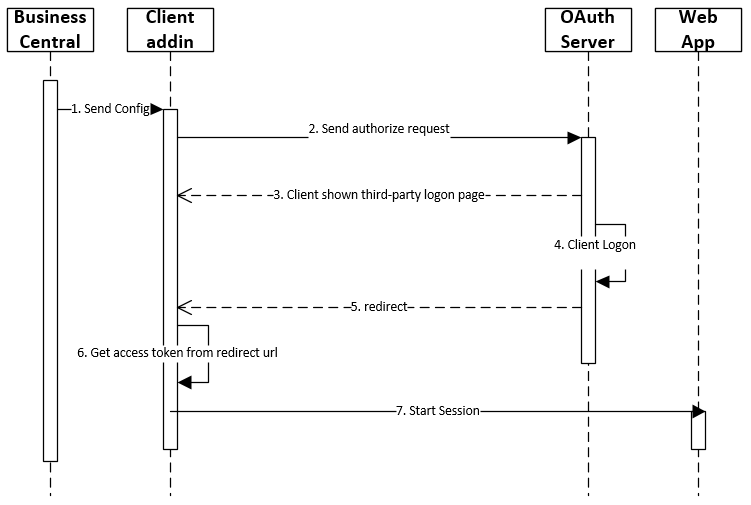

The third-party web application required the OAuth 2.0 implicit flow. For my use, this was going to look something like this:

This is a slight simplification, as we only need to authorize if our previously obtained token has expired, but that is not really the point of this blog post. My problem was step 5, the redirect: this is a URL the authentication server will redirect our user’s browser to (in this case, the src of the iFrame used by the Control Addin). The data we need is in the URL generated by the authentication server, and it will need to be stripped off (step 6) and used in subsequent interactions with the third-party web app:

GET $base_url/api/oauth2/authorize?client_id=$client_id&response_type=token&redirect_uri=$redirect_uri ==> Location: $redirect_uri#expires_in=3600&token_type=Bearer&scope=$scope&access_token=$token
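As a rough sketch, the authorization URL in the request above could be assembled as follows. The host names, the client id and the helper name `buildAuthorizeUrl` are placeholders made up for illustration; only the path and the parameter names come from the request shown above.

```javascript
// Hypothetical helper: builds the implicit-flow authorization URL.
// URLSearchParams takes care of percent-encoding the redirect URI.
function buildAuthorizeUrl(baseUrl, clientId, redirectUri) {
  const params = new URLSearchParams({
    client_id: clientId,
    response_type: 'token', // "token" requests the implicit flow
    redirect_uri: redirectUri
  });
  return `${baseUrl}/api/oauth2/authorize?${params.toString()}`;
}

// Placeholder values, not real endpoints.
const authorizeUrl = buildAuthorizeUrl(
  'https://auth.example.com',
  'my-client-id',
  'https://my-function.example.net/api/redirect'
);
console.log(authorizeUrl);
```

In the Control Addin, a URL built this way would be what the iFrame src is set to before the user logs in.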

So how do we handle this requirement in Business Central? The authentication server needs a URL ($redirect_uri) from us, which it will use to send the access data after the user has logged in. Whatever sits behind that URL then needs to hand the user back to our application. The short answer is that we can’t do this in Business Central alone: there is no way for us to create a web service in Business Central that will handle such a request. We need to build a service that accepts an HTTP GET request, extracts the parameters from the URL for use in our application (technically the parameters after the redirect URL are not query parameters; note the use of # rather than ?, more on this later), and then loads the web app we want to authenticate with into our Control Addin iFrame.

Using an Azure Function as an OAuth 2.0 redirect url

The great news is Microsoft has an offering (which I’ve been trying to find an excuse to use for some time!) called Azure Functions. Azure Functions allow you to quickly (and cheaply) deploy functions as web services to be used by other applications.

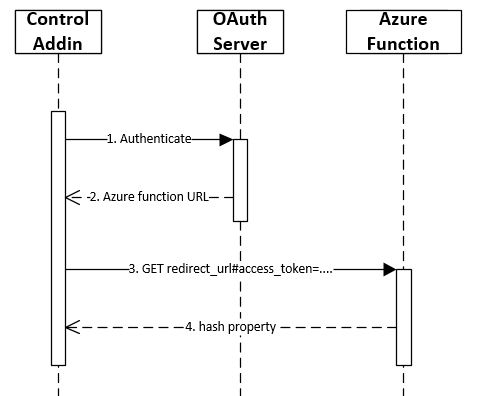

I needed an Azure Function that, when called, would send the data (hash property) in the URL to my Control Addin:

- The iFrame src is set to the OAuth authentication url, and the user logs in with their credentials.

- On success, the authentication server will redirect to our Azure Function URL with the access details in the URL hash property.

- The Azure Function response loads in the Control Addin iFrame.

- The Azure Function sends the hash property back to our application.

- The application decodes the hash property to extract the access token.

Hash Property vs. Query Parameters

I made the distinction earlier that what we’re trying to extract from the redirect URL is not query parameters, but the location hash property. This distinction is important because it affects how the data is retrieved by our Azure Function.

$redirect_uri#expires_in=3600&token_type=Bearer&scope=$scope&access_token=$token

A hash property in a URL (formally, the URL fragment) starts with a # character, whilst query parameters follow a ? character. The fundamental difference is that the hash property is only available to the browser and is not sent to the web server. This means that we’ll need to use client-side scripting to retrieve the data.
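To make the difference concrete, here is a small sketch using the standard `URL` class. The host name and the values in the URL are made up; only the shape of the URL matters.

```javascript
// Example redirect URL with made-up values, containing both a query string and a hash.
const redirected = new URL(
  'https://my-function.example.net/api/redirect?source=demo#expires_in=3600&token_type=Bearer&access_token=abc123'
);

// The query string is part of the HTTP request, so a server can read it.
console.log(redirected.search); // "?source=demo"

// The hash stays in the browser and is never sent to the server.
console.log(redirected.hash); // "#expires_in=3600&token_type=Bearer&access_token=abc123"

// What the server is actually asked for: path plus query, with no hash.
const sentToServer = redirected.pathname + redirected.search;
console.log(sentToServer); // "/api/redirect?source=demo"
```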

Note: OAuth 2.0 does not always send data using a hash property; it depends on the flow you’re implementing.

Implementing the Azure Function

The Azure Function is incredibly simple: all we are doing is receiving a request and sending the hash property to the Control Addin. I used a Node.js-based function, simply because I was already using JavaScript and AL for this project and didn’t feel the need to add a third language 🙂

Step 1 – get the hash property:

let hashString = window.location.hash;

Step 2 – send the hash property to the Control Addin for processing:

This takes a little more thought. You may be tempted to send the hash value back to Business Central via a web service. That is definitely doable, but as the page returned by our Azure Function is running inside the iFrame in our Control Addin, we can simply use client-side window messaging to post the value back to our main window for processing:

window.parent.postMessage(msg, "*");

The above JavaScript code posts a message to the parent window, which will be our Control Addin.

The receiving window will need to know what the message is in order to process it. I create the msg variable as a JavaScript object, so I can pass through some additional information:

let msg = {

type: "xp.authentication",

hash: hashString

};

My message now has a type value and a hash value which I’ll be able to pick up in my Control Addin code.
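Because the message carries an access token, it may be worth replacing the "*" target with a specific origin, so the browser only delivers the message if the parent window really is the expected page. The sketch below assumes the Control Addin page is served from `https://businesscentral.dynamics.com`; that value and the helper name `postHashToParent` are assumptions, and the real origin depends on your environment.

```javascript
// Assumption: the origin of the page hosting the Control Addin.
const BC_ORIGIN = 'https://businesscentral.dynamics.com';

// Hypothetical helper: posts the hash to the parent window, restricted to one origin.
// The window is passed in so the function can be exercised outside a browser.
function postHashToParent(win, targetOrigin) {
  const msg = {
    type: 'xp.authentication',
    hash: win.location.hash
  };
  // The browser drops the message if the parent's origin does not match targetOrigin.
  win.parent.postMessage(msg, targetOrigin);
  return msg;
}

// In the browser this would be called as:
// postHashToParent(window, BC_ORIGIN);
```

If the origin is wrong, the message is silently not delivered, so this is worth testing against the real environment before relying on it.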

Of course this is client-side JavaScript (remember the hash property is only available to the browser), and it will need to run inside the iFrame when the Azure Function is invoked. This means our Azure Function will need to return this code for the browser to execute. I did this by creating a simple HTML document as a string and passing it back as the response body. The full Azure Function code looks like this:

module.exports = async function (context, req) {

const responseMessage = '<html><script>' +

'let hashString = window.location.hash;' +

'let msg = {' +

'type : "xp.authentication", ' +

'hash : hashString ' +

'}; ' +

'window.parent.postMessage(msg, "*"); ' +

'</script>' +

'<h1>Getting access token...</h1></html>';

context.res = {

headers: { 'Content-Type': 'text/html' },

body: responseMessage

};

}
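The same function can also be written with a template literal, which avoids the string concatenation and keeps the returned page readable. This is only a sketch: `buildRedirectPage`, `redirectHandler` and the `targetOrigin` parameter are hypothetical names, and the default of "*" simply mirrors the code above.

```javascript
// Hypothetical helper: builds the HTML page the function returns.
// JSON.stringify turns the origin into a safely quoted JavaScript string literal.
function buildRedirectPage(targetOrigin = '*') {
  return `<!DOCTYPE html>
<html>
  <body>
    <h1>Getting access token...</h1>
    <script>
      const msg = { type: "xp.authentication", hash: window.location.hash };
      window.parent.postMessage(msg, ${JSON.stringify(targetOrigin)});
    </script>
  </body>
</html>`;
}

// Same handler shape as above: set the response on the context object.
async function redirectHandler(context, req) {
  context.res = {
    headers: { 'Content-Type': 'text/html' },
    body: buildRedirectPage()
  };
}

// Export for the Azure Functions Node.js runtime (guarded so the snippet also loads elsewhere).
if (typeof module !== 'undefined') {
  module.exports = redirectHandler;
}
```

Keeping the page in its own function also makes it easy to check the generated HTML without deploying anything.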

Retrieving the hash in the Control Addin

The final part of the jigsaw is to pick up the message sent by the Azure Function. This is done using browser events.

Within our Control Addin code we can add an event listener to a message event as follows:

window.addEventListener("message", function (pEvent) {

if (pEvent.source !== iFrame.contentWindow)

return;

handleMessage(pEvent.data);

});

The above code registers an anonymous function as a listener for the message event. I’m using message events to communicate with the third-party web app as well, so the above code sends all message event data that has come from our Control Addin iFrame to my handleMessage function:

function handleMessage(pMessage) {

//redirect token received?

if (pMessage.type === "xp.authentication") {

decodeAuthHash(pMessage.hash);

iFrame.src = 'https://the-url-of-third-party-app.com';

}

// ... more event "types" picked up here

}

Now you can see why it was important to give the msg variable a type in my Azure Function. If the message is of type xp.authentication, I process the accompanying hash property using the decodeAuthHash() function. I then switch the iFrame src to the third-party application URL specific to my solution.
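Since this message carries a token, the listener could also check where the message came from and what it looks like before acting on it. The following is a sketch of such a check; `isTrustedAuthMessage` and the `FUNCTION_ORIGIN` value are hypothetical, and the origin shown is a placeholder for wherever your Azure Function is hosted.

```javascript
// Assumption: the origin the Azure Function is served from (placeholder value).
const FUNCTION_ORIGIN = 'https://my-function.azurewebsites.net';

// Hypothetical helper: true only for authentication messages that come from
// the expected frame and origin and carry a string hash.
function isTrustedAuthMessage(event, frameWindow, allowedOrigin) {
  return event.source === frameWindow &&
    event.origin === allowedOrigin &&
    typeof event.data === 'object' && event.data !== null &&
    event.data.type === 'xp.authentication' &&
    typeof event.data.hash === 'string';
}

// Possible use inside the Control Addin's message listener:
// if (isTrustedAuthMessage(pEvent, iFrame.contentWindow, FUNCTION_ORIGIN)) {
//   decodeAuthHash(pEvent.data.hash);
// }
```

The source check from the listener is kept, and the origin check is added on top of it, because the same iFrame hosts different pages over its lifetime.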

From here we can extract the required fields out of the hash string for use in our application. I like to create a JSON object to hold the data as it’s a convenient format to use:

function decodeAuthHash(authHash) {

if (authHash === '' || authHash === undefined) {

return;

}

authHash = authHash.replace('#', '');

let hashPairs = authHash.split('&');

const hashJson = {};

hashPairs.forEach(function (hashPair) {

let splitPair = hashPair.split('=');

hashJson[splitPair[0]] = splitPair[1];

});

tAccessToken = hashJson;

}

I’m assigning the JSON variable to a global variable tAccessToken to use in further functions. I can then retrieve the access_token as follows:

let accessToken = tAccessToken.access_token;

See what I mean about JSON being a convenient format in JavaScript? You can use it like any other object with properties, with no need to find the key and get the value as a JsonToken like we do in AL, so the code is much cleaner. That said, I’m no JavaScript expert, so please let me know if you have a more elegant solution!
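One possible alternative, offered as a sketch rather than a definitive answer, is to let the built-in `URLSearchParams` class do the splitting. It also percent-decodes the values and copes with values that contain an = character. The function name `parseAuthHash` and the sample hash are made up for illustration, and unlike decodeAuthHash() it returns the object instead of assigning a global.

```javascript
// Hypothetical alternative to decodeAuthHash: returns the parsed values
// as a plain object, or null when there is nothing to parse.
function parseAuthHash(authHash) {
  if (typeof authHash !== 'string' || authHash === '') {
    return null;
  }
  // Drop a leading "#", then let URLSearchParams split and decode the pairs.
  const params = new URLSearchParams(authHash.replace(/^#/, ''));
  return Object.fromEntries(params);
}

// Sample hash with made-up values.
const token = parseAuthHash(
  '#expires_in=3600&token_type=Bearer&scope=read%20write&access_token=abc123'
);
console.log(token.access_token); // "abc123"
console.log(token.scope);        // "read write"
```

Note that every value comes back as a string, so expires_in would still need converting to a number before doing any arithmetic with it.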

That’s it, thanks for reading.